Binance seems to be listing several new cryptocurrencies each week. As a means of taking advantage of the significant spike in the price of new coin listings on Binance, I have been testing an open source crypto trading bot in Python that detects new coins the moment they’re listed and attempts to place an order quickly for fast gains.

The tool is still in test mode. However, I’ve recently added a new bit of functionality that lets you receive e-mail notifications when Binance makes the announcement on their page. This will give you precious time to buy the coin elsewhere before it gets listed on Binance.

This article will cover how this tool actually works and how you can implement it yourself.

Check out this video if you want to find out more about how this tool can be used alongside the crypto trading algorithm that I’ve been working on. If this is the kind of content you were hoping for, please subscribe!

Ok, now that you know how this links to the bigger strategy, let’s get down to coding!

Install Python and dependencies

If you’re just starting out, the first thing you want to do is install Python on your machine. Simply head over to https://www.python.org/downloads and find a suitable version for your OS.

Now, in order to be able to scrape the Binance Announcement page, and get information about new coin listings ahead of time, we need a scraping tool. We’re going to use Selenium – but first, we need to be able to install Selenium or any other Python packages, and for that we need to install Pip.

Open up your cmd or terminal, and type the following command in:

Alternatively, if you’re using a Mac or Linux system, you may use this:

Once pip is installed, we can install Selenium by running pip install selenium.

Now do the same with pyaml (pip install pyaml) – this will enable us to read and write yml files (more on that in a bit).

Create a directory and start coding

With the preparation work out of the way, we can now create a new directory where our Python scraper can live. We’re also going to create a few different Python files, in order to keep our script nice and neat:

- store_listing.py – helps store new coin symbols from Binance announcements locally

- config.yml – we need to store our e-mail credentials somewhere.

- load_config.py – loads our e-mail credentials from config.yml

- send_notification.py – will contain the script to send us an e-mail when a new Binance announcement is made

- new_listings_scraper.py – the script for scraping the Binance Announcement page

Store listings in a json file

We’re going to need two simple functions in order to store the symbol of the last coin announced by Binance. The first, store_listing(), will create a simple json file that stores anything we pass in under the symbol argument; in our case, that is the symbol of the newly announced listing.

Just as a side note, this function can be re-used in any number of ways in different scripts to store information locally, so you might want to make a note of it for when you need to write and save something in JSON format.

The second function will find and load an existing json file. We’ll use this to replace the current values in our json file with new ones as future Binance listings get announced. Again – this function can be used for anything.
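Here is a minimal sketch of what these two functions could look like in store_listing.py. The name load_listing and the path argument are my own assumptions for illustration; only store_listing() and the new_listing.json file name come from this article.

```python
import json


def store_listing(symbol, path="new_listing.json"):
    """Write `symbol` (any JSON-serialisable value) to `path`, replacing what was there."""
    with open(path, "w") as f:
        json.dump(symbol, f, indent=4)


def load_listing(path="new_listing.json"):
    """Return whatever value is currently stored in the JSON file at `path`."""
    with open(path, "r") as f:
        return json.load(f)
```

Because store_listing() opens the file in write mode, each call overwrites the previous value, which is the behaviour we want when a newer listing replaces an older one.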

Send e-mail notifications with Python and smtplib

The next step is to create a script that will send an e-mail notification. Don’t worry if you can’t see how this all ties together, I promise it will all make sense in the end.

Let’s start by creating a config.yml file. This is where you store your e-mail address and password. It can be any e-mail address, so you may want to create a burner one if you’re not comfortable using your main one.

The next step is to create a simple load_config.py file that will load our credentials from config.yml. Note that we’ve imported the yaml module that we installed earlier via pip.

Ok now for the actual sending of the e-mail notification, I promise.

Let’s create a file called send_notification.py. In the new file let’s import the smtplib and ssl. We’re also going to import our load_config file that we created above.

Next, we call the load_config function and assign the result to a variable called config. This will contain the login details stored in config.yml.

For the actual send, create a function called send_notification that takes in one argument (coin).

We’re going to use port 465 and the Gmail SMTP server. Note that Gmail might restrict you from logging in via Python, so you may have to enable less secure login in the Gmail settings (or, where that setting is not available, use an app password) in order to get this to work.

sent_from and to are both our e-mail address, as stored in the config file.

Subject – you will notice that we’ve wrapped the subject in f-strings and we’re passing the {coin} variable in. This will contain the symbol listed by Binance, once we include this function in our main scraper file.

In the e-mail body we’ll include a link to the Binance Announcements page as well as a google search for the new symbol so that we can easily find out where we can buy it from ahead of it being listed on Binance.

Finally, we’ll use a try and except block in order to trigger an e-mail. We’re now ready to create a Binance scraper in Python using selenium.
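Putting the steps above together, a rough sketch of send_notification.py might look like this. To keep it self-contained, the config is passed in as a plain dictionary rather than loaded from config.yml, and the key names EMAIL_ADDRESS and EMAIL_PASSWORD, the helper build_message, the announcements URL and the exact wording of the subject and body are all assumptions for illustration.

```python
import smtplib
import ssl
import urllib.parse
from email.message import EmailMessage

SMTP_HOST = "smtp.gmail.com"
SMTP_PORT = 465  # implicit SSL port, as used in the article
ANNOUNCEMENTS_URL = "https://www.binance.com/en/support/announcement"  # assumed URL


def build_message(coin, address):
    """Build the e-mail; sender and recipient are both our own address."""
    search = "https://www.google.com/search?" + urllib.parse.urlencode(
        {"q": f"where to buy {coin}"}
    )
    msg = EmailMessage()
    msg["From"] = address
    msg["To"] = address
    msg["Subject"] = f"Binance will list {coin}"
    msg.set_content(f"Announcements: {ANNOUNCEMENTS_URL}\nSearch: {search}\n")
    return msg


def send_notification(coin, config):
    """Send the e-mail; return True on success and False if sending failed."""
    msg = build_message(coin, config["EMAIL_ADDRESS"])
    try:
        context = ssl.create_default_context()
        with smtplib.SMTP_SSL(SMTP_HOST, SMTP_PORT, context=context) as server:
            server.login(config["EMAIL_ADDRESS"], config["EMAIL_PASSWORD"])
            server.send_message(msg)
        return True
    except (smtplib.SMTPException, OSError) as exc:
        print(f"Could not send e-mail: {exc}")
        return False
```

Splitting the message building from the sending means the subject and body can be checked without touching the network.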

Creating the Binance announcements scraper to detect new symbols

The first thing you need to do is download Chromedriver, which Selenium uses to drive Chrome and scrape web pages. Make sure that you download Chromedriver v94, as v95 won’t work with selenium. The Chromedriver version also has to match the version of Chrome installed on your machine, so if you’re having trouble with this, read the error log and download the appropriate version as required.

Now we’re ready to create our new_listings_scraper.py file and import some requirements:

Here we’ve imported several functions from the selenium library, along with a binary_path for chromedriver, to automatically get the correct path for our driver. Note, you may need to pip install chromedriver_py. We’re also importing os.path and json.

In addition to this, we’re also importing the files that we created previously: store_listing, load_config and send_notification.

Now for the actual scraping of the Binance Announcements page:

Firstly, we assign Options() to chrome_options and we then call add_argument("--headless"). This will hide the actual browser when scraping so you won’t see it on your screen. If you want to see how the scraping happens, simply comment out this line.

We then assign the binary path for our driver, and we give it the URL we want it to scrape by using the driver.get(“URL”) method.

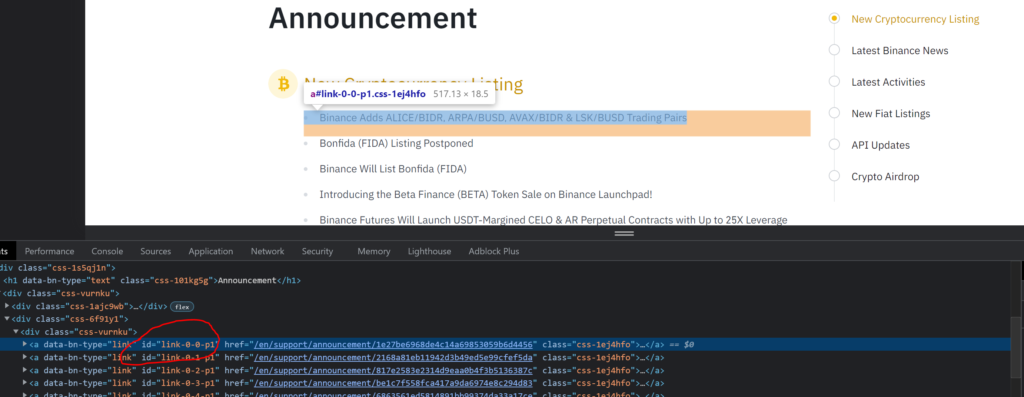

The next step is to create a function that does the scraping. We want to only get the last announcement, and we can do this by targeting the id of the first element with selenium by using latest_announcement = driver.find_element(By.ID, 'link-0-0-p1').
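As a rough sketch of the driver setup and the scraping function described above, assuming Selenium 4 (where the driver path is passed through a Service object) and the chromedriver_py package. The function names and the url parameter are hypothetical, and the Selenium imports sit inside create_driver() only so that the file can be loaded on a machine without Selenium installed.

```python
ANNOUNCEMENT_ID = "link-0-0-p1"  # element id of the latest announcement, as quoted above


def create_driver(headless=True):
    """Start Chrome through Selenium 4, using the driver binary from chromedriver_py."""
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.chrome.service import Service
    from chromedriver_py import binary_path

    chrome_options = Options()
    if headless:
        # Hide the browser window while scraping
        chrome_options.add_argument("--headless")
    service = Service(executable_path=binary_path)
    return webdriver.Chrome(service=service, options=chrome_options)


def get_latest_announcement(driver, url):
    """Open `url` and return the text of the most recent announcement."""
    driver.get(url)
    # "id" is the string value of Selenium's By.ID locator constant
    return driver.find_element("id", ANNOUNCEMENT_ID).text
```

You would call it as get_latest_announcement(create_driver(), url), where url is the address of the Binance Announcements page.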

As we’re only interested in new coin listings, we’re going to create a brief list called exclusions where we will be excluding any announcements that contain the words futures, margin or adds – feel free to edit this as you see fit.
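A small sketch of how that exclusion check could work. The helper name is_new_listing and the case-insensitive comparison are my own assumptions, and the announcement titles in the usage note are made up.

```python
EXCLUSIONS = ["futures", "margin", "adds"]


def is_new_listing(announcement, exclusions=EXCLUSIONS):
    """Return True when the announcement contains none of the excluded words."""
    text = announcement.lower()
    return not any(word in text for word in exclusions)
```

With this in place, is_new_listing("Binance Will List Example Coin (EXC)") is True, while a title such as "Binance Futures Will Launch EXC Perpetual" is filtered out.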

Next we need to enumerate every character in our latest announcement string, so that each character is paired with its own position. Using index won’t work, since index only returns the first position at which an element is found. For example, the letter a appears multiple times in our string, yet its index will always be the position where a first appears. Once the enum list is ready, it’s time to work some filter magic.
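To illustrate the difference, here is a tiny example on a made-up announcement title (the coin is hypothetical):

```python
title = "Binance Will List XYZ (XYZ)"

# index() only ever reports the first occurrence of a character
first_i = title.index("i")  # 1

# enumerate() pairs every character with its own position
enum = list(enumerate(title))
positions_of_i = [pos for pos, ch in enum if ch == "i"]  # [1, 9, 14]
```

Each entry in enum is a (position, character) tuple, so we can always look at the character that comes right after the current one.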

We could just return the entire string “Binance adds…” but, for multiple reasons, we only want the symbol of the new coin to be added. By just getting the symbol, we can build a system that automatically feeds this symbol to a trading algorithm that places the order for us on Binance.

This is where we’re filtering through the letters and trying to select only uppercase characters that are close together and are followed by a space or a “)”. This is what that logic looks like:

uppers = ''.join(ch for i, ch in enum if ch.isupper() and (i + 1 == len(enum) or enum[i + 1][1].isupper() or enum[i + 1][1] in ' )'))

If you start seeing a weird sequence of symbols in your notifications, make sure to add more exclusions for announcements that do not strictly mention a coin listing.
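Here is the same filter wrapped in a function, as a sketch. The name extract_symbol is hypothetical, and I have added a check so that an uppercase letter at the very end of the string is accepted instead of raising an IndexError when the code looks one character ahead.

```python
def extract_symbol(announcement):
    """Keep uppercase letters that are followed by an uppercase letter, a space or ')'."""
    enum = list(enumerate(announcement))
    last = len(enum) - 1
    return "".join(
        ch
        for i, ch in enum
        if ch.isupper()
        # the last character has no successor, so accept it without looking ahead
        and (i == last or enum[i + 1][1].isupper() or enum[i + 1][1] in " )")
    )


print(extract_symbol("Binance Will List Example Coin (EXC)"))  # EXC
```

Note that a title which repeats the symbol in capitals, such as "Binance Will List XYZ (XYZ)", would come out as XYZXYZ, which is one of the weird sequences mentioned above.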

Next we need to define two more functions: one that will store the symbol in a local json file called new_listing.json, and another that will run all the necessary functions – let this be main().

The main function will get the latest coin symbol and store the new listing, while the store_new_listing function calls the send_notification() function to send ourselves an e-mail when appropriate.
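One way these two functions could fit together is sketched below. To keep the example self-contained, the scraping and e-mail functions are passed in as the arguments get_symbol and notify, which is my own simplification; in the real script they would be the scraper function and send_notification().

```python
import json
import os


def store_new_listing(symbol, notify, path="new_listing.json"):
    """Save `symbol` and call notify(symbol), but only if it differs from the stored one."""
    previous = None
    if os.path.isfile(path):
        with open(path, "r") as f:
            previous = json.load(f)
    if symbol == previous:
        return False  # already seen, so no e-mail
    with open(path, "w") as f:
        json.dump(symbol, f, indent=4)
    notify(symbol)
    return True


def main(get_symbol, notify, path="new_listing.json"):
    """Fetch the latest symbol and store it; return True if a notification was sent."""
    symbol = get_symbol()
    if not symbol:
        return False  # the announcement was excluded or contained no symbol
    return store_new_listing(symbol, notify, path)
```

Comparing against the stored value is what stops the script from e-mailing us again every time it sees the same announcement.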

Now let’s run this:

Enjoyed looking over your articles for a few weeks now, since discovering you on a long train journey 🙂

I use .Net, but many of the ideas easily translate. Thought I’d give a little back. On looking at the announcements page, i realised that skipping thro’ the page selector MIGHT invoke some ajax queries and thus produce some json. lo and behold, it does just that. So, to get the json for our first announcements page, we’d simply use:

https://www.binance.com/bapi/composite/v1/public/cms/article/catalog/list/query?catalogId=48&pageNo=1&pageSize=15

Hope you find this useful as it will allow you to use the json directly without needing to scrape the page -enjoy.

cheers

jim
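For anyone who wants to try Jim’s endpoint from Python, here is a minimal sketch using only the standard library. The function names are hypothetical, and the code makes no assumption about the shape of the JSON that comes back, so inspect the result before relying on any particular field.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://www.binance.com/bapi/composite/v1/public/cms/article/catalog/list/query"


def build_url(catalog_id=48, page_no=1, page_size=15):
    """Build the query URL from Jim's comment."""
    query = urllib.parse.urlencode(
        {"catalogId": catalog_id, "pageNo": page_no, "pageSize": page_size}
    )
    return f"{BASE_URL}?{query}"


def fetch_announcements(timeout=10):
    """Send a plain GET request and return the parsed JSON response."""
    with urllib.request.urlopen(build_url(), timeout=timeout) as response:
        return json.load(response)
```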

Hey Jim,

Thanks a lot for your feedback and I really appreciate you enjoying the content. That’s a really cool discovery, thank you for sharing!

How did you come across this in the first place?

Andrei – in my *day job* (cough), we have to harvest a LOT of data from various TV platforms and one of my tasks is always to look for hidden API’s that supply json data to populate the page under the covers. I use a tool called Fiddler to do this which takes away 100% of the guess work.

Of course, not all sites are friendly enough to let you access this json api – some require tokens and http posts etc, but looks like binance is happy to use a standard get request with no caveats. Using Fiddler, you’ll discover a LOT of these little things, they have certainly made my life a lot easier over the years!!

Hope this helps in your future work – always happy to assist moving fwd.

Amazing, thanks again. I will definitely use this in my next projects.

Hi Jim and Andrei!

Thanks for the wonderful information you provided!

Did you happen to know about another hidden API for other crypto exchanges like coinbase/ kraken/ binance.us or huobi?

No not really but you could use Fiddler or the Network Tab in the inspect section to find out where these pages get their data from

Hi, can you tell me how to use Fiddler to find the hidden URL? I didn’t find the request hidden by Binance after I used it. Thank you.

Hi, I am having trouble at this line

line 36, in

uppers = ''.join(item[1] for item in enum if item[1].isupper() and (enum[enum.index(item) + 1][1].isupper() or

IndexError: list index out of range

It is a small issue with the code…. but I am not able to figure out a solution…. please help.

Is there any solution for the following alert

[1008/230143.115:INFO:CONSOLE(42)] “Refused to execute inline script because it violates the following Content Security Policy directive: “script-src blob: ‘self’ https://cdn.ampproject.org https://bin.bnbstatic.com https://public.bnbstatic.com ‘nonce-6a7bfef8-e074-4415-abd4-8331be0264a1’ https://accounts.binance.com https://www.googletagmanager.com

Wonderful content, thanks for sharing! Did you face any delays while scraping the page? I am facing a 1-2 minutes delay between when the content was actually published on the webpage and when the scraper detected it.

Hi Andrei,

really enjoy your detailed guide so far. I noticed that there is still a delay between the link that JimTollan provided and the actual timestamp of the news article. Did you find any better source to scrape info from?

Ok so i created all the files with the code in them, how do I run this and test it?

Also, the end

if __name__ == "__main__":

main() where does that go? In the new_listing json file?

Would also like to know please. Total Python Newbie. How to actually run the files?

Hi Andrei!

I have these errors:

Traceback (most recent call last):
  File "D:\binance-trading-bot-new-coins-main\binance-trading-bot-new-coins-main\main.py", line 4, in <module>
    from new_listings_scraper import *
  File "D:\binance-trading-bot-new-coins-main\binance-trading-bot-new-coins-main\new_listings_scraper.py", line 15, in <module>
    driver = webdriver.Chrome(executable_path=binary_path, options=chrome_options)
  File "C:\Users\Vlad\AppData\Local\Programs\Python\Python310\lib\site-packages\selenium\webdriver\chrome\webdriver.py", line 69, in __init__
    super(WebDriver, self).__init__(DesiredCapabilities.CHROME['browserName'], "goog",
  File "C:\Users\Vlad\AppData\Local\Programs\Python\Python310\lib\site-packages\selenium\webdriver\chromium\webdriver.py", line 93, in __init__
    RemoteWebDriver.__init__(
  File "C:\Users\Vlad\AppData\Local\Programs\Python\Python310\lib\site-packages\selenium\webdriver\remote\webdriver.py", line 266, in __init__
    self.start_session(capabilities, browser_profile)
  File "C:\Users\Vlad\AppData\Local\Programs\Python\Python310\lib\site-packages\selenium\webdriver\remote\webdriver.py", line 357, in start_session
    response = self.execute(Command.NEW_SESSION, parameters)
  File "C:\Users\Vlad\AppData\Local\Programs\Python\Python310\lib\site-packages\selenium\webdriver\remote\webdriver.py", line 418, in execute
    self.error_handler.check_response(response)
  File "C:\Users\Vlad\AppData\Local\Programs\Python\Python310\lib\site-packages\selenium\webdriver\remote\errorhandler.py", line 243, in check_response
    raise exception_class(message, screen, stacktrace)
selenium.common.exceptions.SessionNotCreatedException: Message: session not created: This version of ChromeDriver only supports Chrome version 94
Current browser version is 96.0.4664.45 with binary path C:\Program Files\Google\Chrome\Application\chrome.exe

What am I doing wrong?

Hey, nice work mate ! I have some questions :

print("Checking for coin announcements every 2 hours (in a separate thread)")

How can we check for coin announcements every 10 minutes?

And where did you put the code to start the thread? Could you explain these options to me?

“driver = webdriver.Chrome(executable_path=binary_path, options=chrome_options)”